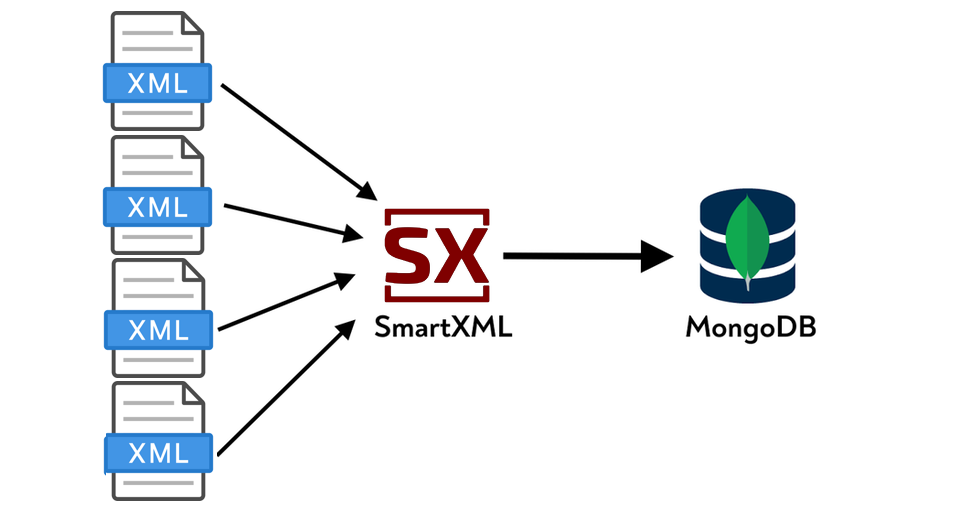

There are many situations where you may need to export data from XML to MongoDB.

Although XML and the JSON-like format used by MongoDB (BSON) have much in common, they also differ in a number of ways that make them non-interchangeable.

Therefore, when you face the task of exporting data from XML to MongoDB, you will need to either:

- Write your own XML parsing scripts, or
- Use ETL tools.

Although modern language models can write parsing scripts quite well in languages like Python, these scripts have a serious drawback: they are not unified. A language model will typically generate a separate script for each XML type, so as soon as you have more than one type of XML, you also have several parsing scripts to maintain.
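To make the maintenance problem concrete, here is a minimal sketch of what such a hand-written script usually boils down to. It uses Python's standard `xml.etree.ElementTree`; the XML layout below (including the camelCase tag `purchaseDate`) is a hypothetical assumption, since the article does not show the source file. Every tag path is hard-coded into the function.

```python
import xml.etree.ElementTree as ET

# Hypothetical input: the real file layout is not shown in the article.
SAMPLE_XML = """<marketingData>
  <customers>
    <customer>
      <name>John Smith</name>
      <email>john.smith@example.com</email>
      <purchases>
        <purchase>
          <product>Smartphone</product>
          <price>700</price>
          <purchaseDate>2025-01-10</purchaseDate>
        </purchase>
      </purchases>
    </customer>
  </customers>
</marketingData>"""


def parse_marketing_data(xml_text):
    """Parse ONE specific XML layout; every path and tag below is hard-coded."""
    root = ET.fromstring(xml_text)
    customers = []
    for customer in root.iterfind("customers/customer"):
        purchases = [
            {
                "product": p.findtext("product"),
                "price": p.findtext("price"),
                "purchase_date": p.findtext("purchaseDate"),
            }
            for p in customer.iterfind("purchases/purchase")
        ]
        customers.append({
            "name": customer.findtext("name"),
            "email": customer.findtext("email"),
            "purchases": purchases,
        })
    return {"customers": customers}


if __name__ == "__main__":
    print(parse_marketing_data(SAMPLE_XML))
```

Renaming a single tag, or adding a second XML type, means editing or cloning this function, which is exactly the maintenance burden described above.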

This problem is usually solved with specialized ETL tools. In this article, we will look at an ETL tool called SmartXML. Although SmartXML also supports converting XML to a relational representation, we will only look at the process of uploading XML into MongoDB.

Real-world XML can be extremely large and complex. Since this is an introductory article, we will consider a simple case in which:

- All XML files have the same structure;
- The logical model of the XML matches the storage model in MongoDB;
- The extracted fields don't need complex processing.

We'll cover more complex cases later; for now, let's examine a simple example. In it, we will upload to MongoDB only the fields that serve a practical purpose, rather than the entire XML.

Create a New Project

It is recommended to create a new project from the GUI. This will automatically create the necessary folder structure and parsing rules. A full description of the project structure can be found in the official documentation.

All parameters described in this article can be configured in graphical mode, but for clarity, we will focus on the textual representation.

In addition to the config.txt file with the project settings and job.txt for batch jobs, the project itself consists of:

- The template of the intermediate internal SmartDOM view, located in templates/data-templates.red in the project folder;
- The rules for processing and transforming SmartDOM itself, located in the rules folder.

Let’s consider the structure of data-templates.red:

```
#[
    sample: #[
        marketing_data: #[
            customers: [
                customer: [
                    name: none
                    email: none
                    purchases: [
                        purchase: [
                            product: none
                            category: none
                            price: none
                            store: none
                            location: none
                            purchase_date: none
                        ]
                    ]
                ]
            ]
        ]
    ]
]
```

Note

- The name sample is the name of the category; it is arbitrary.
- marketing_data is the name of the subcategory. At least one subcategory (subtype) is required.
- The names in the intermediate view don't have to match the XML tag names exactly. In this example, we intentionally used the snake_case style.
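The ignore list later in this article shows that the XML itself uses camelCase tags (marketingData, lessImportantInfo), while the template uses snake_case. If you want to derive template names from tag names mechanically, a tiny helper is enough. This is a hypothetical illustration only: SmartXML does not require it, because the actual name mapping is configured in the rules described in the next section.

```python
import re

# Zero-width match between a lowercase letter/digit and an uppercase letter,
# e.g. the boundary between "marketing" and "Data" in "marketingData".
_BOUNDARY = re.compile(r"(?<=[a-z0-9])(?=[A-Z])")


def camel_to_snake(tag):
    """Convert a camelCase XML tag name to snake_case (hypothetical helper).

    Runs of capitals such as "XMLData" are not split, so they become "xmldata".
    """
    return _BOUNDARY.sub("_", tag).lower()


if __name__ == "__main__":
    for tag in ["marketingData", "lessImportantInfo", "purchase_date", "email"]:
        print(tag, "->", camel_to_snake(tag))
```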

Extract Rules

The rules are located in the rules directory in the project folder.

When working with MongoDB, only two rules are relevant:

- tags-matching-rules.red: sets the matches between the XML tag tree and SmartDOM;
- grow-rules.red: describes the relationship between SmartDOM nodes and real XML nodes.

```
sample: [
    purchase: ["purchase"]
    customer: ["customer"]
]
```

The key is the name of the node in SmartDOM; the value is an array of the spellings this node can have in the real XML file. In our example, these names are the same.
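Conceptually, such a rule is a reverse lookup: any spelling listed in the array resolves to the SmartDOM key. The sketch below models that idea with a plain Python dictionary; it is not SmartXML's implementation, and the extra variants "Customer" and "client" are hypothetical, added only to show a case where the XML and SmartDOM names differ.

```python
# Mirrors the rule above: SmartDOM node name -> spellings found in real XML files.
# "Customer" and "client" are hypothetical extra variants for illustration.
RULES = {
    "purchase": ["purchase"],
    "customer": ["customer", "Customer", "client"],
}


def build_lookup(rules):
    """Invert the rules into a {xml_tag: smartdom_node} dictionary."""
    lookup = {}
    for node, variants in rules.items():
        for variant in variants:
            # The same XML spelling must not point at two different nodes.
            if variant in lookup and lookup[variant] != node:
                raise ValueError(f"tag {variant!r} is mapped to two nodes")
            lookup[variant] = node
    return lookup


LOOKUP = build_lookup(RULES)


def smartdom_node(xml_tag):
    """Return the SmartDOM node for an XML tag, or None if no rule matches."""
    return LOOKUP.get(xml_tag)


if __name__ == "__main__":
    print(smartdom_node("client"))    # customer
    print(smartdom_node("purchase"))  # purchase
    print(smartdom_node("browser"))   # None
```

Inverting the mapping once keeps each lookup a single dictionary access, no matter how many spelling variants a node has.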

Ignored Tags

To keep minor data out of MongoDB, we create files in the ignores folder, one per section and named after that section. Each file lists the tag paths to skip during extraction. For our example, this is a sample.txt file containing:

```
["marketingData" "customer" "lessImportantInfo" "browser"]
["marketingData" "customer" "lessImportantInfo" "deviceType"]
["marketingData" "customer" "lessImportantInfo" "newsletterSubscribed"]
```

As a result, after morphological analysis, the intermediate representation takes the following form:

```
customers: [
    customer: [
        name: "John Smith"
        email: "john.smith@example.com"
        loyalty_status: "Gold"
        age: "34"
        gender: "Male"
        membership_id: "123456"
        purchases: [
            purchase: [
                product: "Smartphone"
                category: "Electronics"
                price: "700"
                store: "TechWorld"
                location: "New York"
                purchase_date: "2025-01-10"
            ]
        ]
    ]
]
```

Note that the representation shown after morphological analysis is minimal: it contains only the data from the first matching nodes (here, the first customer and their first purchase).
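The ignore entries above are tag paths that start at the document root. The sketch below shows the same idea with `xml.etree.ElementTree`: walk the tree, track the current path, and drop any element whose full path matches an ignore entry before extracting anything. It assumes marketingData is the root tag, uses a hypothetical XML snippet, and illustrates the technique rather than SmartXML's internals.

```python
import xml.etree.ElementTree as ET

# Same paths as sample.txt above (root tag first).
IGNORES = {
    ("marketingData", "customer", "lessImportantInfo", "browser"),
    ("marketingData", "customer", "lessImportantInfo", "deviceType"),
    ("marketingData", "customer", "lessImportantInfo", "newsletterSubscribed"),
}

# Hypothetical input shaped to match the ignore paths.
SAMPLE_XML = """<marketingData>
  <customer>
    <name>John Smith</name>
    <lessImportantInfo>
      <browser>Chrome</browser>
      <deviceType>Mobile</deviceType>
      <newsletterSubscribed>true</newsletterSubscribed>
    </lessImportantInfo>
  </customer>
</marketingData>"""


def prune(element, ignores, path=()):
    """Remove, in place, every descendant whose tag path is in `ignores`."""
    path = path + (element.tag,)
    for child in list(element):  # iterate over a copy because we mutate
        if path + (child.tag,) in ignores:
            element.remove(child)
        else:
            prune(child, ignores, path)
    return element


if __name__ == "__main__":
    root = prune(ET.fromstring(SAMPLE_XML), IGNORES)
    print(ET.tostring(root, encoding="unicode"))
```

Matching on the full path rather than on the bare tag name means a browser tag elsewhere in the document would be kept.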

Here’s the JSON file that will be generated:

```json
{
  "customers": [
    {
      "name": "John Smith",
      "email": "john.smith@example.com",
      "loyalty_status": "Gold",
      "age": "34",
      "gender": "Male",
      "membership_id": "123456",
      "purchases": [
        {
          "product": "Smartphone",
          "category": "Electronics",
          "price": "700",
          "store": "TechWorld",
          "location": "New York",
          "purchase_date": "2025-01-10"
        },
        {
          "product": "Wireless Earbuds",
          "category": "Audio",
          "price": "150",
          "store": "GadgetStore",
          "location": "New York",
          "purchase_date": "2025-01-11"
        }
      ]
    },
    {
      "name": "Jane Doe",
      "email": "jane.doe@example.com",
      "loyalty_status": "Silver",
      "age": "28",
      "gender": "Female",
      "membership_id": "654321",
      "purchases": [
        {
          "product": "Laptop",
          "category": "Electronics",
          "price": "1200",
          "store": "GadgetStore",
          "location": "San Francisco",
          "purchase_date": "2025-01-12"
        },
        {
          "product": "USB-C Adapter",
          "category": "Accessories",
          "price": "30",
          "store": "TechWorld",
          "location": "San Francisco",
          "purchase_date": "2025-01-13"
        },
        {
          "product": "Keyboard",
          "category": "Accessories",
          "price": "80",
          "store": "OfficeMart",
          "location": "San Francisco",
          "purchase_date": "2025-01-14"
        }
      ]
    },
    {
      "name": "Michael Johnson",
      "email": "michael.johnson@example.com",
      "loyalty_status": "Bronze",
      "age": "40",
      "gender": "Male",
      "membership_id": "789012",
      "purchases": [
        {
          "product": "Headphones",
          "category": "Audio",
          "price": "150",
          "store": "AudioZone",
          "location": "Chicago",
          "purchase_date": "2025-01-05"
        }
      ]
    },
    {
      "name": "Emily Davis",
      "email": "emily.davis@example.com",
      "loyalty_status": "Gold",
      "age": "25",
      "gender": "Female",
      "membership_id": "234567",
      "purchases": [
        {
          "product": "Running Shoes",
          "category": "Sportswear",
          "price": "120",
          "store": "FitShop",
          "location": "Los Angeles",
          "purchase_date": "2025-01-08"
        },
        {
          "product": "Yoga Mat",
          "category": "Sportswear",
          "price": "40",
          "store": "FitShop",
          "location": "Los Angeles",
          "purchase_date": "2025-01-09"
        }
      ]
    },
    {
      "name": "Robert Brown",
      "email": "robert.brown@example.com",
      "loyalty_status": "Silver",
      "age": "37",
      "gender": "Male",
      "membership_id": "345678",
      "purchases": [
        {
          "product": "Smartwatch",
          "category": "Wearable",
          "price": "250",
          "store": "GadgetPlanet",
          "location": "Boston",
          "purchase_date": "2025-01-07"
        },
        {
          "product": "Fitness Band",
          "category": "Wearable",
          "price": "100",
          "store": "HealthMart",
          "location": "Boston",
          "purchase_date": "2025-01-08"
        }
      ]
    }
  ]
}
```

Configuring Connection to MongoDB

MongoDB doesn't accept data inserts over plain HTTP, so we need a small intermediary service that receives the JSON from SmartXML and writes it to the database.

Let's install the dependencies: `pip install flask pymongo`.

The service itself:

```python
import traceback

from flask import Flask, request, jsonify
from pymongo import MongoClient

app = Flask(__name__)

# Connection to MongoDB (no space between host and port in the URI)
client = MongoClient('mongodb://localhost:27017')
db = client['testDB']
collection = db['testCollection']


@app.route('/insert', methods=['POST'])
def insert_document():
    try:
        # Parses the body when Content-Type is application/json;
        # silent=True returns None instead of raising on a missing or invalid body
        data = request.get_json(silent=True)
        if not data:
            return jsonify({"error": "Empty or invalid JSON payload"}), 400

        # Each POST body is stored as one MongoDB document
        result = collection.insert_one(data)
        return jsonify({"insertedId": str(result.inserted_id)}), 200
    except Exception as e:
        print(traceback.format_exc())
        return jsonify({"error": str(e)}), 500


if __name__ == '__main__':
    # Listens on 127.0.0.1:3000 by default
    app.run(port=3000)
```
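Before wiring SmartXML to the service, you can check it by sending the same kind of request yourself. The sketch below uses only the standard library (`urllib.request`) to POST a JSON document with `Content-Type: application/json`, which is what the service's `request.get_json()` call expects. The URL matches the service above; the document is a trimmed copy of the generated JSON, and the function names are hypothetical.

```python
import json
import urllib.request

SERVICE_URL = "http://127.0.0.1:3000/insert"


def build_request(url, document):
    """Prepare a POST request that carries `document` as a JSON body."""
    body = json.dumps(document).encode("utf-8")
    return urllib.request.Request(
        url,
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def send_document(url, document, timeout=10):
    """POST the document and return the service's decoded JSON reply.

    A 400 or 500 reply from the service raises urllib.error.HTTPError.
    """
    with urllib.request.urlopen(build_request(url, document), timeout=timeout) as resp:
        return json.loads(resp.read().decode("utf-8"))


if __name__ == "__main__":
    doc = {"customers": [{"name": "John Smith", "email": "john.smith@example.com"}]}
    # Requires the Flask service to be running; prints the service reply.
    print(send_document(SERVICE_URL, doc))
```

A successful call returns a dictionary with an insertedId key, matching the JSON the service sends back.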

Next, we point SmartXML at this service in the config.txt file (see the nosql-url parameter):

```
job-number: 1
root-xml-folder: "D:/data/data-samples"
xml-filling-stat: false ; table: filling_percent_stat should exist
ignore-namespaces: false
ignore-tag-attributes: false
use-same-morphology-for-same-file-name-pattern: false
skip-schema-version-tag: true
use-same-morphology-for-all-files-in-folder: false
delete-data-before-insert: none
connect-to-db-at-project-opening: true
source-database: "SQLite" ; available values: PostgreSQL/SQLite
target-database: "SQLite" ; available values: PostgreSQL/SQLite/NoSQL
bot-chatID: ""
bot-token: ""
telegram-notifications: true
db-driver: ""
db-server: "127.0.0.1"
db-port: ""
db-name: ""
db-user: ""
db-pass: ""
sqlite-driver-name: "SQLite3 ODBC Driver"
sqlite-db-path: ""
nosql-url: "http://127.0.0.1:3000/insert"
append-subsection-name-to-nosql-url: false
no-sql-login: "" ; login and pass are empty
no-sql-pass: ""
```
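A malformed nosql-url (for example, a stray space before the port) is an easy mistake to miss. As a hedged illustration, the sketch below reads `key: value ; comment` lines like the ones above and checks that nosql-url is a well-formed HTTP URL. It is a simplified reader written for the sample shown here, not SmartXML's own config parser, and it may not handle every feature of the real format; `read_settings` and `check_nosql_url` are hypothetical names.

```python
from urllib.parse import urlsplit


def read_settings(text):
    """Parse `key: value ; comment` lines into a dict of strings (simplified)."""
    settings = {}
    for raw in text.splitlines():
        line = raw.strip()
        if not line or line.startswith(";"):
            continue
        key, sep, rest = line.partition(":")  # split on the FIRST colon only
        if not sep:
            continue
        rest = rest.strip()
        if rest.startswith('"'):
            # Quoted value: take everything up to the closing quote.
            end = rest.find('"', 1)
            value = rest[1:end] if end != -1 else rest[1:]
        else:
            # Unquoted value: drop a trailing "; comment".
            value = rest.split(";", 1)[0].strip()
        settings[key.strip()] = value
    return settings


def check_nosql_url(url):
    """Raise ValueError unless url looks like http(s)://host[:port]/path."""
    if any(ch.isspace() for ch in url):
        raise ValueError(f"whitespace in URL: {url!r}")
    parts = urlsplit(url)
    if parts.scheme not in ("http", "https") or not parts.hostname:
        raise ValueError(f"not an HTTP URL: {url!r}")
    _ = parts.port  # raises ValueError if the port is not a valid number
    return url


if __name__ == "__main__":
    sample = 'nosql-url: "http://127.0.0.1:3000/insert"\nxml-filling-stat: false ; note'
    settings = read_settings(sample)
    print(check_nosql_url(settings["nosql-url"]))
```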

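Remember that MongoDB automatically creates the database and collection named in the service (here, testDB and testCollection) on the first insert if they do not exist. However, this behavior can hide mistakes: a misspelled name silently creates a new database or collection instead of raising an error, so it is recommended not to rely on it by default.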
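One way to avoid relying on implicit creation is to have the intermediary service refuse to write to a collection that does not exist yet. A minimal sketch, assuming the pymongo Database object from the service above (`list_collection_names()` and `create_collection()` are real pymongo methods); the helper `require_collection` and the exception class are hypothetical names. You create the collection once, deliberately, for example with `db.create_collection("testCollection")`, and the check then guards against typos.

```python
class MissingCollectionError(RuntimeError):
    """Raised when the target collection has not been created explicitly."""


def require_collection(db, name):
    """Return db[name] only if the collection already exists.

    `db` is expected to be a pymongo Database; any object that provides
    list_collection_names(), a `name` attribute and item access works too.
    """
    if name not in db.list_collection_names():
        raise MissingCollectionError(
            f"collection {name!r} does not exist in database {db.name!r}"
        )
    return db[name]
```

In the service, `collection = require_collection(db, 'testCollection')` would then fail at startup on a misspelled name instead of silently creating a new collection. A misspelled database name is caught as well, because a database that does not exist reports no collections.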

Let’s run the service itself:

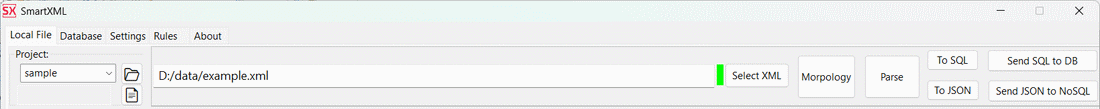

Next, click Parse, then Send JSON to NoSQL.

Now connect to the MongoDB console in any convenient way and execute the following commands:

```
show databases
admin 40.00 KiB
config 72.00 KiB
local 72.00 KiB
testDB 72.00 KiB
use testDB
switched to db testDB
db.testCollection.find().pretty()
```

The result should look like the following:

```
{
  _id: ObjectId('278e1b2c7c1823d4fde120ef'),
  customers: [
    {
      name: 'John Smith',
      email: 'john.smith@example.com',
      loyalty_status: 'Gold',
      age: '34',
      gender: 'Male',
      membership_id: '123456',
      purchases: [
        {
          product: 'Smartphone',
          category: 'Electronics',
          price: '700',
          store: 'TechWorld',
          location: 'New York',
          purchase_date: '2025-01-10'
        },
        {
          product: 'Wireless Earbuds',
          category: 'Audio',
          price: '150',
          store: 'GadgetStore',
          location: 'New York',
          purchase_date: '2025-01-11'
        }
      ]
    },
    {
      name: 'Jane Doe',
      email: 'jane.doe@example.com',
      loyalty_status: 'Silver',
      age: '28',
      gender: 'Female',
      membership_id: '654321',
      purchases: [
        {
          product: 'Laptop',
          category: 'Electronics',
          price: '1200',
          store: 'GadgetStore',
          location: 'San Francisco',
          purchase_date: '2025-01-12'
        },
        {
          product: 'USB-C Adapter',
          category: 'Accessories',
          price: '30',
          store: 'TechWorld',
          location: 'San Francisco',
          purchase_date: '2025-01-13'
        },
        {
          product: 'Keyboard',
          category: 'Accessories',
          price: '80',
          store: 'OfficeMart',
          location: 'San Francisco',
          purchase_date: '2025-01-14'
        }
      ]
    },
    {
      name: 'Michael Johnson',
      email: 'michael.johnson@example.com',
      loyalty_status: 'Bronze',
      age: '40',
      gender: 'Male',
      membership_id: '789012',
      purchases: [
        {
          product: 'Headphones',
          category: 'Audio',
          price: '150',
          store: 'AudioZone',
          location: 'Chicago',
          purchase_date: '2025-01-05'
        }
      ]
    },
    {
      name: 'Emily Davis',
      email: 'emily.davis@example.com',
      loyalty_status: 'Gold',
      age: '25',
      gender: 'Female',
      membership_id: '234567',
      purchases: [
        {
          product: 'Running Shoes',
          category: 'Sportswear',
          price: '120',
          store: 'FitShop',
          location: 'Los Angeles',
          purchase_date: '2025-01-08'
        },
        {
          product: 'Yoga Mat',
          category: 'Sportswear',
          price: '40',
          store: 'FitShop',
          location: 'Los Angeles',
          purchase_date: '2025-01-09'
        }
      ]
    },
    {
      name: 'Robert Brown',
      email: 'robert.brown@example.com',
      loyalty_status: 'Silver',
      age: '37',
      gender: 'Male',
      membership_id: '345678',
      purchases: [
        {
          product: 'Smartwatch',
          category: 'Wearable',
          price: '250',
          store: 'GadgetPlanet',
          location: 'Boston',
          purchase_date: '2025-01-07'
        },
        {
          product: 'Fitness Band',
          category: 'Wearable',
          price: '100',
          store: 'HealthMart',
          location: 'Boston',
          purchase_date: '2025-01-08'
        }
      ]
    }
  ]
}
```

Conclusion

In this example, we have seen how to automate uploading XML files to MongoDB without writing any parsing code: apart from the small intermediary service, everything was done through SmartXML's template, rules, and settings. Although the example covers only one file, a single project can handle a large number of file types and subtypes with different structures, and it can perform fairly complex manipulations, such as type conversion and calling external services to process field values in real time. This makes it possible not only to extract data from XML but also to process some of the values via external APIs, including large language models.

Opinions expressed by DZone contributors are their own.